What is Retrieval Augmented Generation?

Building an AI with a Memory

“Sure, you’ve heard of RAG. But have you actually used it effectively?”

Retrieval-Augmented Generation, or RAG, is no longer a new buzzword. It has been discussed at countless conferences, in proof-of-concepts, and on AI strategy slides. For some, it might even feel like yesterday’s innovation.

But here’s the reality we see every week at Plainsight: while almost every organization has heard of RAG, very few are actually using it to its full potential.

Many companies have experimented with generative AI pilots or chatbots, but the real value, creating an AI that draws on your organization’s knowledge and applies it intelligently, remains largely untapped. We still see powerful, practical use cases in client projects that are either unimplemented, inefficiently set up, or disconnected from business outcomes.

So it’s never too late to talk about RAG. In fact, for most enterprises, now is the time to get it right, moving from experimentation to enterprise-grade design and best practices.

So, whether you’re just starting or refining your AI strategy, here’s what you need to know about building an AI with RAG.

“When key knowledge walks out the door”

Every organization has that one person. The colleague who knows everything: the tool configurations, the legacy workflows, the corner cases. When that expert goes on holiday, or moves on, the inevitable happens: the questions stop getting answered, productivity stalls, and the team scrambles.

Similarly, many organizations sit on a gold mine of documents: wikis, PDFs, emails, transcripts, manuals. Yet the right answer is often buried in the digital haystack. Employees spend hours hunting. Processes slow down. Innovation gets delayed.

In both cases the root challenge is the same: your knowledge is fragmented, siloed, and underutilized.

What if you could build an AI assistant that not only speaks your language, but remembers your context and pulls from your company’s knowledge base in real time? Enter Retrieval-Augmented Generation (RAG): in essence, AI with memory.

Why Traditional Generative AI Isn’t Enough

Generative AI tools (large language models, chatbots) are fantastic at writing, brainstorming, and summarizing, but they often lack one thing: organizational context. They don’t know your tools, your policies, or your data. They don’t know what you know.

The result? Lots of promises, but limited usefulness in enterprise-scale, knowledge-intensive settings. You end up asking generic questions, getting vague answers, or worse, getting incorrect answers.

RAG changes that dynamic. It brings the best of two worlds: the natural-language generative power of LLMs plus the retrieval of your internal knowledge. In practice, it means your AI isn’t working from a generic model alone; it’s working from your data.

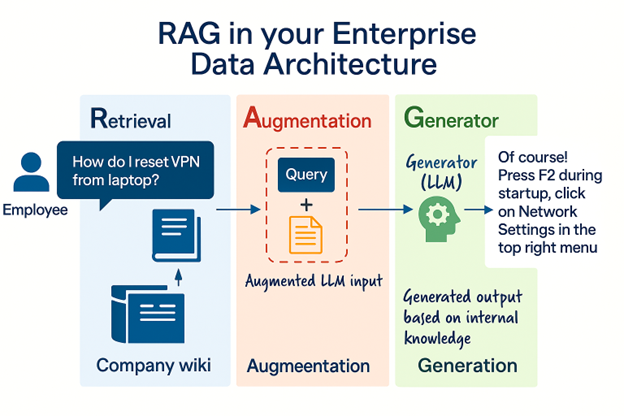

How RAG Works (Without the Technical Jargon)

1. Retrieval: when you ask a question, the RAG system searches your internal knowledge sources (documents, manuals, emails, logs) for relevant content.

2. Generation: the system then uses that content as context to craft an answer tailored to your question, with traceability to the source.

Put simply: your AI accesses your intranet (and more) and presents an answer in conversational form. It’s not just “what the model knows”, it’s “what your company knows”.
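
For readers who do want a peek under the hood, the two steps above can be sketched in a few lines of Python. This is a minimal illustration, not a production design: the document names and contents are hypothetical, and simple word-count similarity stands in for the embedding models a real system would typically use. The generation step is shown only up to the prompt, which would then be sent to the LLM of your choice.

```python
import math
import re
from collections import Counter

# Hypothetical in-memory knowledge base; a real system would index
# wikis, PDFs, emails, and other internal sources.
DOCUMENTS = {
    "vpn-guide.md": "To reset your VPN token, open the IT portal and choose Reset Token.",
    "expenses.md": "Travel expenses must be submitted within 30 days of the trip.",
    "onboarding.md": "New employees receive a laptop and a VPN token on their first day.",
}


def tokenize(text):
    """Lowercase the text and split it into alphanumeric words."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a, b):
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[t] * b[t] for t in set(a) & set(b))
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def retrieve(question, documents, top_k=2):
    """Step 1, retrieval: return the names of the most relevant documents."""
    q = Counter(tokenize(question))
    scored = [(cosine(q, Counter(tokenize(text))), name) for name, text in documents.items()]
    scored.sort(reverse=True)
    return [name for score, name in scored[:top_k] if score > 0]


def build_prompt(question, documents, sources):
    """Step 2, generation input: put the retrieved text, tagged by source, in the prompt."""
    context = "\n".join(f"[{name}] {documents[name]}" for name in sources)
    return (
        "Answer using only the context below and cite the source in brackets.\n\n"
        f"Context:\n{context}\n\nQuestion: {question}"
    )


question = "How do I reset my VPN token?"
sources = retrieve(question, DOCUMENTS)
prompt = build_prompt(question, DOCUMENTS, sources)
print(prompt)  # this prompt would be passed to the language model
```

Because every retrieved passage is tagged with its file name, the model can cite where an answer came from, which is what makes the traceability mentioned above possible.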

Why This Matters at Executive Level

For senior leaders, RAG isn’t about experimenting with new technology; it’s about making a strategic shift in how the organization manages knowledge:

Operational efficiency: Employees spend less time hunting for answers and more time executing.

Knowledge resilience: Your expert goes on holiday or leaves, but the knowledge stays.

Confidence & compliance: With traceable sources and context, you reduce risk of misinformation or regulatory exposure.

Agility: Change a process and update your documentation, and your AI reflects it as soon as the content is re-indexed (without retraining the model).

In short: you’re not just building a “chatbot”. You’re building a living knowledge assistant that scales human expertise across the organization.
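
The agility point is worth a small illustration, because it is where RAG differs most from retraining a model. The sketch below assumes a toy keyword index (the `KnowledgeIndex` class and the policy text are hypothetical, invented for this example): updating a document in the index changes what gets retrieved, while the language model itself is never touched.

```python
class KnowledgeIndex:
    """Toy keyword index; stands in for a real search or vector index."""

    def __init__(self):
        self._docs = {}

    def upsert(self, doc_id, text):
        """Add a document, or replace it if the id already exists."""
        self._docs[doc_id] = text

    def search(self, query):
        """Return (doc_id, text) for the document sharing the most words with the query."""
        terms = set(query.lower().split())

        def overlap(text):
            return len(terms & set(text.lower().split()))

        best = max(self._docs.items(), key=lambda item: overlap(item[1]), default=None)
        if best is None or overlap(best[1]) == 0:
            return None
        return best


index = KnowledgeIndex()
index.upsert("leave-policy", "Leave requests are approved by your team lead.")
before = index.search("who approves leave requests")

# The process changes: only the document is updated, not the model.
index.upsert("leave-policy", "Leave requests are approved through the HR portal workflow.")
after = index.search("who approves leave requests")

print(before[1])
print(after[1])
```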

Embedding RAG into Your Data Strategy

We see a lot of our customers treat RAG like a plug-and-play toy. 'Set it and forget it' is the usual narrative, and that's where it tends to go wrong. From our experience, here are some guidelines on doing it right:

Unstructured information readiness: Documents, meeting notes, and emails need to be accessible, indexed, and appropriately governed.

Governance & trust: Treat internal knowledge like any other critical data asset, with clear ownership, continuous quality checks, and compliance controls to keep the information reliable and secure.

AI integration: The generative model must be combined with retrieval logic, embeddings, and an index, so that answers are grounded in retrieved content.

Use case prioritization: Choose the high-impact scenarios first (internal helpdesk, legal Q&A, product support), then scale.

When organizations do this, they move from “experimenting with AI” to “operationalizing knowledge at scale”.
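
To make the readiness and governance guidelines concrete, here is a minimal sketch of indexing with governance metadata. It assumes each document is split into chunks that carry an owner and a set of groups allowed to read it, and that retrieval filters on those groups before anything reaches the model. The `Chunk` fields, group names, and sample texts are hypothetical, and word overlap again stands in for embedding-based search.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Chunk:
    doc_id: str
    owner: str                 # team accountable for keeping the content correct
    allowed_groups: frozenset  # groups permitted to see this content
    text: str


def chunk_document(doc_id, owner, allowed_groups, text, max_words=40):
    """Split a document into word-limited chunks that inherit its metadata."""
    words = text.split()
    return [
        Chunk(doc_id, owner, frozenset(allowed_groups), " ".join(words[i:i + max_words]))
        for i in range(0, len(words), max_words)
    ]


def search(index, query, user_groups, top_k=3):
    """Rank only the chunks the user is allowed to see, by word overlap with the query."""
    terms = set(query.lower().split())
    visible = [c for c in index if c.allowed_groups & set(user_groups)]
    scored = [(len(terms & set(c.text.lower().split())), c) for c in visible]
    scored = [(score, c) for score, c in scored if score > 0]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [c for _, c in scored[:top_k]]


index = []
index += chunk_document(
    "hr-salary-bands", "HR", {"hr"},
    "Salary bands are reviewed every January by the HR team.",
)
index += chunk_document(
    "helpdesk-faq", "IT", {"hr", "engineering"},
    "Password resets are handled through the helpdesk portal.",
)

print(search(index, "salary bands", ["engineering"]))  # restricted content is filtered out
print([c.doc_id for c in search(index, "salary bands", ["hr"])])
```

Filtering before ranking, rather than after generation, means restricted content never enters the prompt in the first place, which is usually the safer default for compliance-sensitive use cases.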

If you’re curious how this looks in practice, read our related article “From Data to Dialogue: How to Build RAG and Agentic Chatbots on Azure”. It explains the technical and architectural steps behind what you’ve just read, using real Azure components like Cognitive Search, OpenAI, and custom APIs.

A Closing Thought

Generative AI without RAG is like a brilliant consultant who arrives with zero knowledge of your company, full of ideas, but no context. With RAG, that consultant gets hold of the context. It knows your tools, your data, your history.

In the coming years the competitive edge won’t come from simply using the largest model. It will come from how well organizations use that model with their own knowledge. Because in the age of intelligent systems, what you know matters, and how your AI remembers it will differentiate you.

At Plainsight, we’ve guided many organizations through that journey, from the first pilot to production-ready solutions. We’ve seen firsthand how RAG transforms information into real business intelligence, and we’re always keen to help your company take that next step, too.

If you’re exploring how to give your AI a memory of its own, we’d love to start that conversation.