From Data to Dialogue: How to Build RAG and Agentic Chatbots on Azure

In today’s AI landscape, chatbots are evolving far beyond basic Q&A. From enhancing customer experience on websites to streamlining internal workflows, organizations are tapping into the power of generative AI to turn static information into dynamic conversations. Across several client engagements, we’ve helped design and deploy chatbot solutions ranging from retrieval-augmented generation (RAG) systems to more advanced agentic architectures, depending on each client’s needs.

In this post, we’ll walk through how we’ve built both types of bots using the Azure ecosystem, explore when each approach makes sense, and share some lessons learned from the field.

RAG: Grounding Chatbots in Your Data

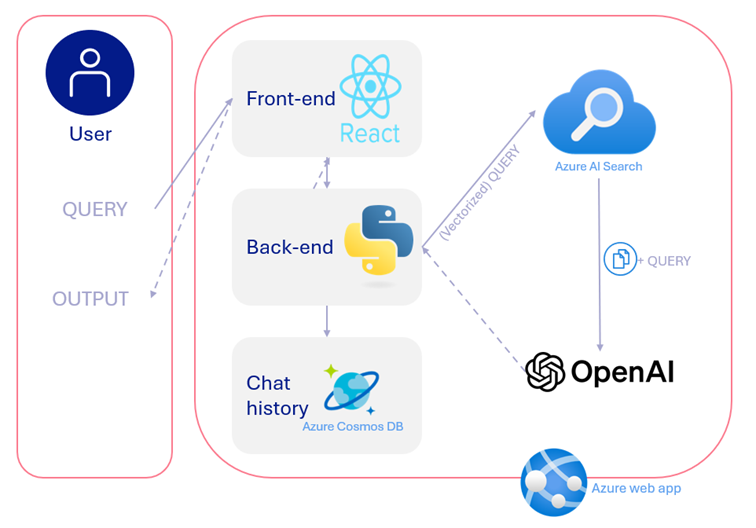

Retrieval-Augmented Generation (RAG) is a commonly used architecture for domain-specific chatbots. The idea is to augment a large language model (LLM) like GPT with a (vectorized) search engine that pulls in relevant custom data on demand. This helps the model give grounded, accurate answers without needing to retrain it with new content.
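To make the idea concrete, here is a minimal sketch of the "augment" step: retrieved passages are placed into the prompt so the model answers from them rather than from memory. The `Passage` class, the `build_grounded_messages` helper, and the sample passages are hypothetical names and made-up data for illustration, not the code from our client projects. The message format follows the common system/user chat convention.

```python
from dataclasses import dataclass


@dataclass
class Passage:
    source: str  # hypothetical identifier, e.g. a product ID or document title
    text: str


def build_grounded_messages(question: str, passages: list[Passage]) -> list[dict]:
    """Assemble chat messages that restrict the model to the retrieved passages."""
    # Number each passage so the model can cite it as [1], [2], ...
    context = "\n".join(
        f"[{i}] ({p.source}) {p.text}" for i, p in enumerate(passages, start=1)
    )
    system = (
        "Answer using only the numbered sources below. "
        "Cite sources like [1]. If the sources do not contain the answer, say so.\n\n"
        f"Sources:\n{context}"
    )
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": question},
    ]


# Made-up sample passages standing in for search results.
passages = [
    Passage("care-guide", "Wipe the leather with a damp cloth; avoid soaking."),
    Passage("product-123", "Minimalist leather wallet in black or tan."),
]
messages = build_grounded_messages(
    "What's the best way to clean this material?", passages
)
```

The resulting `messages` list is what you would pass to a chat completion call. Because each passage carries a source label, the answer can point back to where it came from.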

At one client site, we built a public-facing RAG chatbot for a retail company that wanted to improve how users interact with their product catalog. The solution allows shoppers to ask questions like:

“What’s a good gift for someone who likes minimal design?”

“What’s the best way to clean this material?”

“What pieces of jewelry go well with this dress?”

To support this, we used:

Azure AI Search enhanced with semantic ranking and hybrid vector search for more relevant, context-aware document retrieval

Azure OpenAI Service for LLM-generated natural language answers

A custom web app hosted on Azure to serve the front- and back-end interfaces

This hybrid setup combines keyword precision with semantic understanding, so queries return relevant product data even for open-ended or inspirational prompts. Catalog updates reach the chatbot without retraining or reworking the underlying model, thanks to the retrieval-based design and a daily scheduled Azure Function that refreshes the search indexes.
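Azure AI Search merges the keyword and vector rankings of a hybrid query using Reciprocal Rank Fusion (RRF). The service does this internally, so you never write it yourself, but a small sketch helps show why a document that ranks reasonably well in both lists tends to win. The function name, the product IDs, and the constant `k = 60` (a value commonly used for RRF) are assumptions for illustration only.

```python
from collections import defaultdict


def reciprocal_rank_fusion(
    rankings: list[list[str]], k: int = 60
) -> list[tuple[str, float]]:
    """Fuse several ranked lists: each document scores sum(1 / (k + rank))."""
    scores: dict[str, float] = defaultdict(float)
    for ranking in rankings:
        # Ranks start at 1, so the top hit in a list contributes 1 / (k + 1).
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    # Highest fused score first.
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)


# Made-up rankings for a query such as "jewelry that goes with this dress".
keyword_hits = ["silver-necklace", "gold-ring", "pearl-earrings"]
vector_hits = ["pearl-earrings", "silver-necklace", "linen-scarf"]

fused = reciprocal_rank_fusion([keyword_hits, vector_hits])
for doc_id, score in fused:
    print(f"{doc_id}: {score:.4f}")
```

In this toy example the two products that appear in both lists end up ahead of the ones that appear in only one, which is the behavior that makes hybrid search robust for open-ended prompts.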

When to use RAG:

You want to ground your chatbot in a known corpus (docs, FAQs, product data)

Accuracy and traceability are important

You need fast deployment with manageable complexity

Agentic Chatbots: From Information to Action

While RAG is excellent for answering questions, some use cases demand more interactivity, reasoning, and/or automation. This is where agentic chatbots shine.

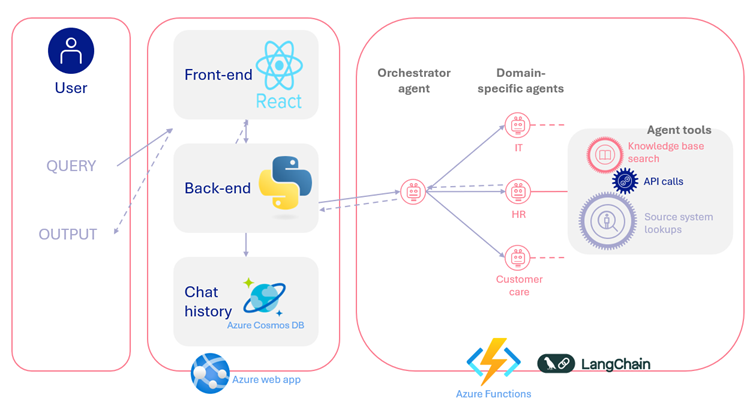

At another client engagement, we started with a RAG-based internal assistant designed to answer questions based on a department-specific knowledge base. Over time, we saw that users (especially Customer Care and HR) were spending significant time on repetitive lookups and crafting nuanced replies. So we evolved the solution into an agentic chatbot that could not only answer but also execute routine tasks autonomously.

Using LangChain and hosted within Azure, this chatbot now:

Performs live lookups to source systems (e.g., order status, remaining vacation days)

Helps draft personalized responses to employee questions, adjusting for tone, formality, and intent

The result is a much more intelligent assistant that doesn’t just provide information but supports real tasks, saving valuable time while maintaining a human-like conversational tone.

Key components used:

LangChain for orchestrating tools and agents

Azure Functions to encapsulate the agent functionality and perform secure backend operations like API lookups

Azure OpenAI for the conversational layer
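The core pattern behind these components is a tool registry plus a decision step that picks which tool to call. The sketch below shows that pattern in plain Python and deliberately does not use LangChain's API. Every name in it is hypothetical: `get_order_status`, `get_remaining_vacation_days`, the fake lookup data, and `choose_tool`, which stands in for the LLM's tool-selection step with simple keyword rules.

```python
from typing import Callable, Optional


# Hypothetical tools; real versions would call the source systems' APIs.
def get_order_status(order_id: str) -> str:
    fake_orders = {"A100": "shipped"}
    return fake_orders.get(order_id, "unknown")


def get_remaining_vacation_days(employee_id: str) -> str:
    fake_balances = {"E7": 12}
    return str(fake_balances.get(employee_id, 0))


# Registry mapping tool names to callables, so dispatch is a dictionary lookup.
TOOLS: dict[str, Callable[[str], str]] = {
    "get_order_status": get_order_status,
    "get_remaining_vacation_days": get_remaining_vacation_days,
}


def choose_tool(question: str) -> Optional[tuple[str, str]]:
    """Stand-in for the LLM's tool choice (keyword rules, illustration only)."""
    words = question.replace("?", "").split()
    lowered = question.lower()
    if "order" in lowered:
        return "get_order_status", words[-1]
    if "vacation" in lowered:
        return "get_remaining_vacation_days", words[-1]
    return None


def answer(question: str) -> str:
    decision = choose_tool(question)
    if decision is None:
        # No action required: fall back to a plain knowledge-base answer.
        return "No tool needed; answer from the knowledge base."
    tool_name, argument = decision
    result = TOOLS[tool_name](argument)
    return f"{tool_name}({argument!r}) -> {result}"


print(answer("What is the status of order A100?"))
print(answer("How many vacation days are left for E7?"))
```

In a real agent, the model proposes the tool name and arguments, and the tool result is fed back to the model so it can phrase the final reply. Keeping the tools behind a narrow registry like this also makes it easier to control which backend operations the bot is allowed to perform.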

When to use an agentic chatbot:

The bot needs to perform actions beyond answering (e.g., lookups, updates)

You want to automate multi-step tasks or workflows

Users need personalized, context-sensitive responses

Lessons Learned from the Field

Across both projects we’ve picked up some valuable insights:

Start simple. A well-designed RAG system can go a long way. It often delivers 80% of the value with 20% of the complexity.

Data quality directly impacts retrieval performance. Poorly structured, outdated, or noisy data leads to weak results, no matter how advanced your vector search setup is. High-quality, semantically consistent data is critical for accurate retrieval. Even then, expect to tune embedding strategies and ranking methods iteratively before responses are consistently relevant.

Azure’s native integrations help a lot. Azure AI Search, Azure Functions, Azure OpenAI, and secure identity tools give you the flexibility to scale without reinventing the wheel.

User experience matters. Whether the chatbot is internal or external, the interface and tone make a big difference in adoption and trust.

Plan for iteration. Both types of bots benefit from feedback loops, analytics, and ongoing refinement as users engage and new needs emerge.
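One simple way to put numbers on the tuning and feedback loops mentioned above is a hit rate at k: the share of test questions for which at least one known-relevant document shows up in the top k results. The sketch below is a generic metric, not the evaluation we ran for our clients, and the function name and sample data are made up for illustration.

```python
def hit_rate_at_k(
    results: dict[str, list[str]],
    relevant: dict[str, set[str]],
    k: int = 3,
) -> float:
    """Fraction of queries with at least one relevant document in the top k."""
    hits = sum(
        1
        for query, ranked in results.items()
        if relevant.get(query, set()) & set(ranked[:k])
    )
    return hits / len(results) if results else 0.0


# Made-up retrieval output and relevance labels for two test questions.
results = {
    "gift for minimal design fan": ["wallet-01", "vase-07", "lamp-03"],
    "how to clean suede": ["scarf-02", "ring-09", "belt-04"],
}
relevant = {
    "gift for minimal design fan": {"vase-07"},
    "how to clean suede": {"care-guide-suede"},
}

score = hit_rate_at_k(results, relevant, k=3)
print(f"hit rate@3: {score:.2f}")
```

Re-running a metric like this after each change to chunking, embeddings, or ranking makes it easier to tell whether a tweak actually improved retrieval.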

Conclusion

RAG and agentic chatbots represent two ends of a spectrum: one focused on accessing knowledge, the other on executing actions. At many organizations, the journey begins with a simple RAG bot and evolves toward agentic capabilities as user needs mature.

Azure provides a robust foundation for both approaches, whether you’re building a product advisor for customers or an intelligent assistant for your team. With the right architecture, you can turn raw data into meaningful dialogue and empower users with AI that doesn’t just talk, but helps.

Curious how this could work for your organization?

Reach out to Plainsight to explore how we can support your data and AI journey. Or, if you’d like to explore the topic further, join me at dataMinds Connect on the 7th of October, where I’ll be speaking about building RAG and agentic chatbots on Azure in more detail.


Sofie is a Data Engineer specializing in AI and scalable data solutions.